Can this ChatGPT detector unerringly spot robotic idioms? Nope


As someone who teaches at university – and works as a journalist – I could hardly wait to try out the app created by Princeton senior Edward Tian for teachers to bust AI-generated essay submissions.

The app, GPTZero, was launched on January 2. It’s supposed to detect whether text is written by ChatGPT, the chatbot that has the facility to muse on anything and everything, in the time it takes to blink.

Mr Tian, who is majoring in computer science and minoring in journalism, clearly has a vested interest of sorts in ensuring that wordsmithing doesn’t fall entirely to robots. He says his app can “quickly and efficiently” decipher whether a human or ChatGPT authored an essay. In an initial tweet introducing GPTZero, he noted: “there’s so much chatgpt hype going around. is this and that written by AI? we as humans deserve to know!”

Within days of its launch, the new app had been tried at least 30,000 times, enough traffic to make it crash.

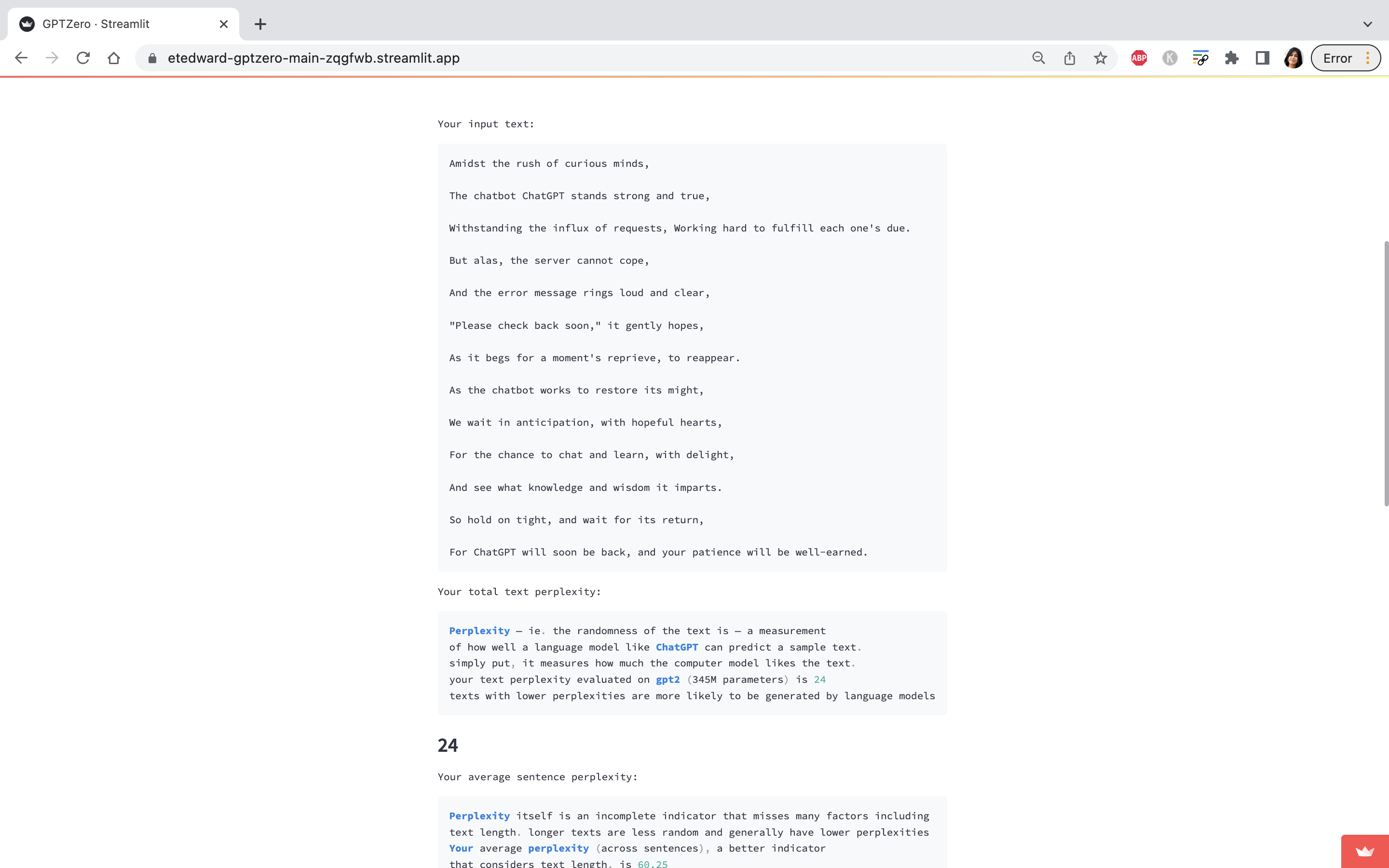

I tried it in a state of great excitement, entering ChatGPT’s sonnet (see January 10) into GPTZero.

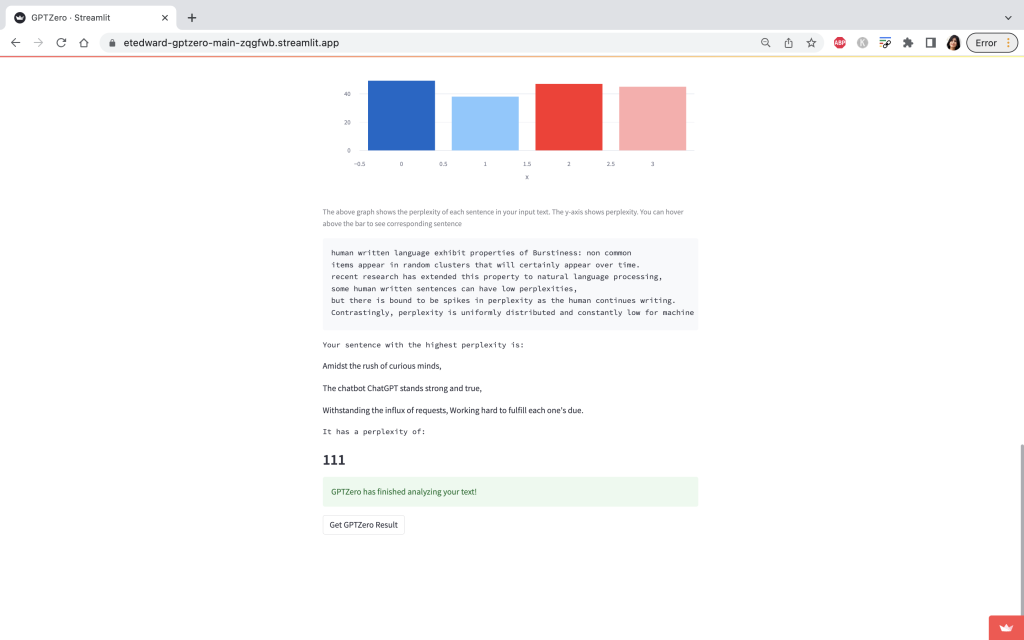

GPTZero used two indicators – “perplexity” and “burstiness” – to analyse the text. In this context, as NPR has explained, “perplexity measures the complexity of text. If GPTZero is perplexed by the text, then it has a high complexity and it’s more likely to be human-written. However, if the text is more familiar to the bot — because it’s been trained on such data — then it will have low complexity and therefore is more likely to be AI-generated. Separately, burstiness compares the variations of sentences. Humans tend to write with greater burstiness, for example, with some longer or complex sentences alongside shorter ones. AI sentences tend to be more uniform.”
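To make the two indicators a little more concrete, here is a rough Python sketch. It is emphatically not GPTZero's code, which this post does not have access to; it is a toy stand-in built on assumptions of my own. Perplexity is approximated with a simple word-frequency (unigram) model built from a reference text, where a real detector would presumably use a large language model, and burstiness is approximated as the spread of sentence lengths. The function names `unigram_perplexity` and `burstiness` are hypothetical.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference):
    """Perplexity of `text` under an add-one-smoothed unigram model
    built from `reference`. Lower means the text looks more 'familiar'.

    Assumption: a unigram model is only a toy proxy for the language
    model a real detector would use.
    """
    ref_counts = Counter(tokenize(reference))
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no word tokens")
    # Vocabulary covers both texts so unseen words get a non-zero probability.
    vocab_size = len(set(ref_counts) | set(tokens))
    total = sum(ref_counts.values())
    log_prob = sum(
        math.log((ref_counts[tok] + 1) / (total + vocab_size)) for tok in tokens
    )
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Population standard deviation of sentence lengths, in words.

    Assumption: sentence-length spread is one simple way to express
    'variation of sentences'; other definitions are possible.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(tokenize(s)) for s in sentences]
    return pstdev(lengths) if lengths else 0.0
```

On this toy measure, a passage whose sentences are all the same length scores zero for burstiness, while one that mixes a two-word sentence with a twenty-word one scores much higher. Likewise, text made up of words that are common in the reference material gets a lower perplexity than text full of words the model has never seen.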

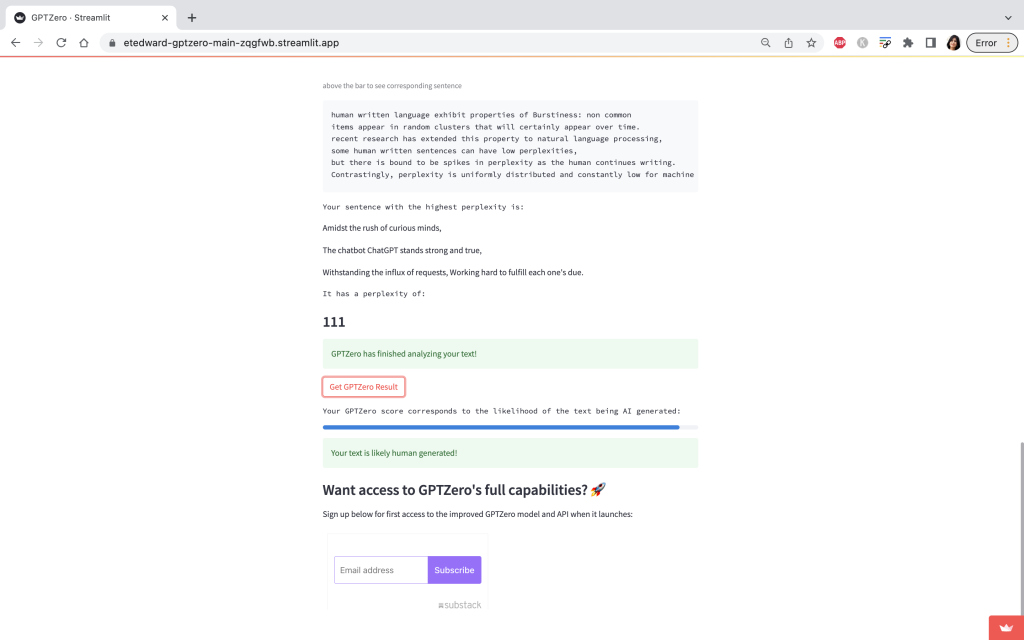

What would GPTZero’s verdict be on ChatGPT’s versifying?

It found the text to be “human-generated” (see screenshot). It was a disappointing conclusion, but it merely bears out something GPTZero’s creator has acknowledged: his bot isn’t foolproof.

Human perspicacity and common sense may often work rather better.
